The Rig

The virtual model rig used by Monk is a combination of pieced-together hardware and software.

Hardware

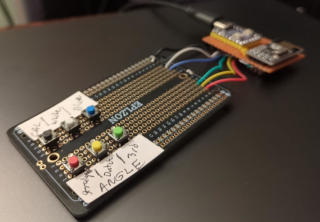

The picture here shows the basic hardware used for non-optical motion tracking.

Tracking is done non-optically using inexpensive Inertial Measurement Units (IMUs). The IMU used here, the MPU-6050, combines accelerometer and gyroscope data in a black-box Digital Motion Processor (DMP). There's no official documentation for getting the DMP working, and it's only mentioned by name in product briefs. A couple of C libraries have successfully reverse-engineered it, though. Using the DMP offloads some processing from the microcontroller onto the IMU.

The IMUs are connected to a microcontroller, the RP2040, which lightly processes and formats the IMU data. The microcontroller then transmits this data over an nRF24 radio to the receiver. The receiver is an nRF24 and an RP2040 connected to a host computer running the software described in the Software section.
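To make the "format and transmit" step more concrete, here is a minimal sketch of how one quaternion could be packed into a small fixed-size radio payload. The packet layout, field names, and fixed-point scale are assumptions made for illustration, not the rig's actual format; the only hard constraint relied on is that an nRF24L01 payload holds at most 32 bytes.

```python
import struct

# Hypothetical packet layout (not taken from the rig): one byte of sensor ID,
# one byte of sequence counter, then the quaternion (w, x, y, z) as four
# little-endian signed 16-bit integers in fixed point.
PACKET_FORMAT = "<BBhhhh"
SCALE = 1 << 14          # assumed fixed-point scale: 1.0 maps to 16384
MAX_NRF24_PAYLOAD = 32   # nRF24L01 payloads are limited to 32 bytes


def pack_quaternion(sensor_id, seq, q):
    """Pack a unit quaternion (w, x, y, z) into a fixed-size payload."""
    # Clamp defensively so an out-of-range component cannot overflow int16.
    ints = [max(-32768, min(32767, round(c * SCALE))) for c in q]
    return struct.pack(PACKET_FORMAT, sensor_id & 0xFF, seq & 0xFF, *ints)


def unpack_quaternion(payload):
    """Inverse of pack_quaternion; returns (sensor_id, seq, (w, x, y, z))."""
    sensor_id, seq, *ints = struct.unpack(PACKET_FORMAT, payload)
    return sensor_id, seq, tuple(i / SCALE for i in ints)
```

With this layout each reading takes 10 bytes, so it fits comfortably in a single nRF24 payload. The one-byte sequence counter is included only so a receiver could notice dropped packets.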

On the right of the picture above is the wearable: two IMUs, a battery pack, and the microcontroller/radio box. One IMU is worn on glasses and the other on a chest harness; the central box of the wearable attaches to the wearer's shoulder. On the lower left of the picture is the receiver unit. On the upper left is some test hardware consisting of an IMU and a microcontroller sandwiched together.

The receiver board was later modified with an additional button board for use during regular live events. The buttons handle tasks such as camera controls and zeroing the model's quaternions when drift occurs.
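One common way to zero an orientation is to store the inverse of the current reading when the button is pressed and multiply every later reading by it. The sketch below assumes unit quaternions in (w, x, y, z) order and uses a hypothetical `Zeroer` class; it illustrates the general idea rather than the rig's actual code.

```python
def q_conj(q):
    """Conjugate of a quaternion; equals the inverse for unit quaternions."""
    w, x, y, z = q
    return (w, -x, -y, -z)


def q_mul(a, b):
    """Hamilton product a * b of two quaternions in (w, x, y, z) order."""
    aw, ax, ay, az = a
    bw, bx, by, bz = b
    return (
        aw * bw - ax * bx - ay * by - az * bz,
        aw * bx + ax * bw + ay * bz - az * by,
        aw * by - ax * bz + ay * bw + az * bx,
        aw * bz + ax * by - ay * bx + az * bw,
    )


class Zeroer:
    """Hypothetical helper: re-references readings to a captured pose."""

    def __init__(self):
        self.offset = (1.0, 0.0, 0.0, 0.0)  # identity until zero() is called

    def zero(self, raw):
        # Remember the inverse of the current reading (the "button press").
        self.offset = q_conj(raw)

    def apply(self, raw):
        # Orientation relative to the pose captured by the last zero().
        return q_mul(self.offset, raw)
```

Calling `zero()` with the current reading makes that pose the new neutral, so any drift accumulated up to that moment is cancelled; drift that builds up afterwards still needs another press.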

This tracking system is related to, but slightly different from, the concept used by Wii remotes. In the Wii remote, only accelerometer data was used to find gravity and directional forces; positioning was done using the infrared sensor bar. The Wii remote's approach is probably easier and more efficient in a lot of ways. On the other hand, it requires a stable optical setup (the IR sensor bar), which this rig avoids in order to remain non-optical.

Software

Unity sits at the center of the current software. It acts as the engine that controls and renders the virtual 3D world based on input from the hardware setup. Code written to run in Unity reads from the receiver unit over a serial connection. This code translates the quaternions sent over the air into the Unity world and then transforms 3D objects accordingly. The 3D head model contains a skeleton so that only rotational data from the IMUs is needed: a relative position can be computed from the rotational data and the virtual skeleton alone. Rotational data from the small, inexpensive IMUs is prone to drift, so some filtering and calibration are necessary.
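The claim that position follows from rotation plus a skeleton can be illustrated with a small forward-kinematics sketch. It is written in Python rather than Unity code, and the bone offsets, joint names, and y-up axis convention are assumptions chosen for illustration: each IMU's orientation rotates a fixed bone vector, and the rotated bones are added along the chain.

```python
def rotate(q, v):
    """Rotate vector v by unit quaternion q = (w, x, y, z)."""
    w, x, y, z = q
    vx, vy, vz = v
    # t = 2 * cross(q_vec, v)
    tx = 2 * (y * vz - z * vy)
    ty = 2 * (z * vx - x * vz)
    tz = 2 * (x * vy - y * vx)
    # v' = v + w * t + cross(q_vec, t)
    return (
        vx + w * tx + (y * tz - z * ty),
        vy + w * ty + (z * tx - x * tz),
        vz + w * tz + (x * ty - y * tx),
    )


# Hypothetical bone vectors in metres, expressed in each bone's rest pose
# with y pointing up. These are illustrative values, not measured ones.
CHEST_TO_NECK = (0.0, 0.25, 0.0)
NECK_TO_HEAD = (0.0, 0.10, 0.0)


def head_position(chest_q, head_q):
    """Head position relative to the chest joint, from orientations only."""
    neck = rotate(chest_q, CHEST_TO_NECK)   # chest IMU orients the torso bone
    head = rotate(head_q, NECK_TO_HEAD)     # glasses IMU orients the head bone
    return tuple(n + h for n, h in zip(neck, head))
```

With both orientations at identity the head sits straight above the chest; tilting only the head quaternion swings the head bone around the neck joint while the neck joint itself stays put.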

Future

This setup could theoretically be expanded to control a larger rig, such as arms, hands, and legs. However, the drift from the MPU-6050 would lead to compounding error in the motion tracking. Also, the nRF24 module has a technical channel limitation that would need to be overcome once a couple more transmitters are added to the rig. It's not impossible to overcome that limitation, but other limitations, such as controller time budgets or syncing issues, may appear further down the road. Unity may also be replaced (I've used other engines and wanted to try out Unity; the difficult part of the rig was the hardware programming and quaternion wrangling, not Unity).